In a striking departure from Silicon Valley’s spend-big philosophy, Anthropic President Daniela Amodei outlined a radical efficiency-over-scale strategy in a January 3 CNBC interview, positioning the AI startup against OpenAI in the industry’s defining infrastructure arms race.

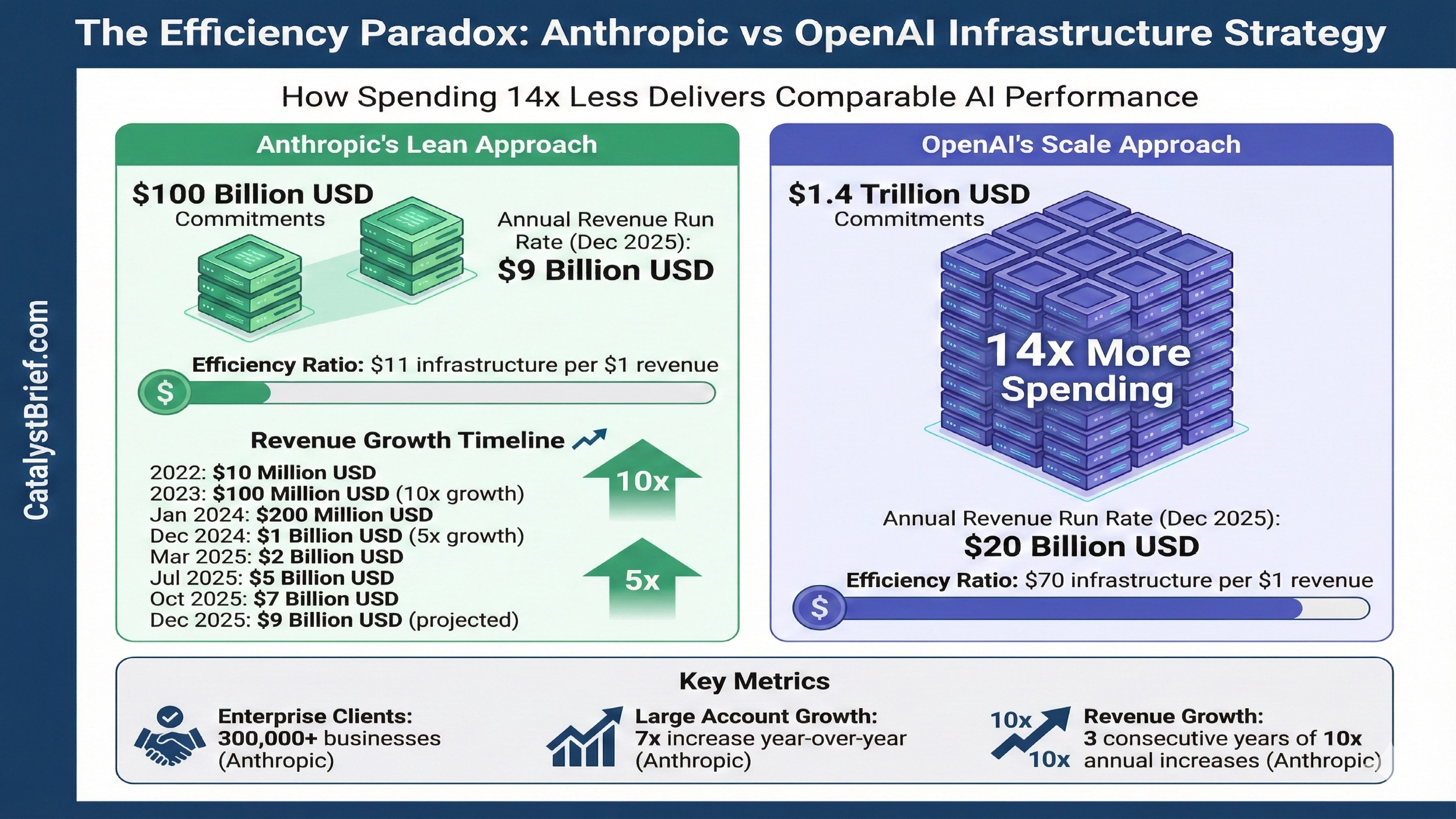

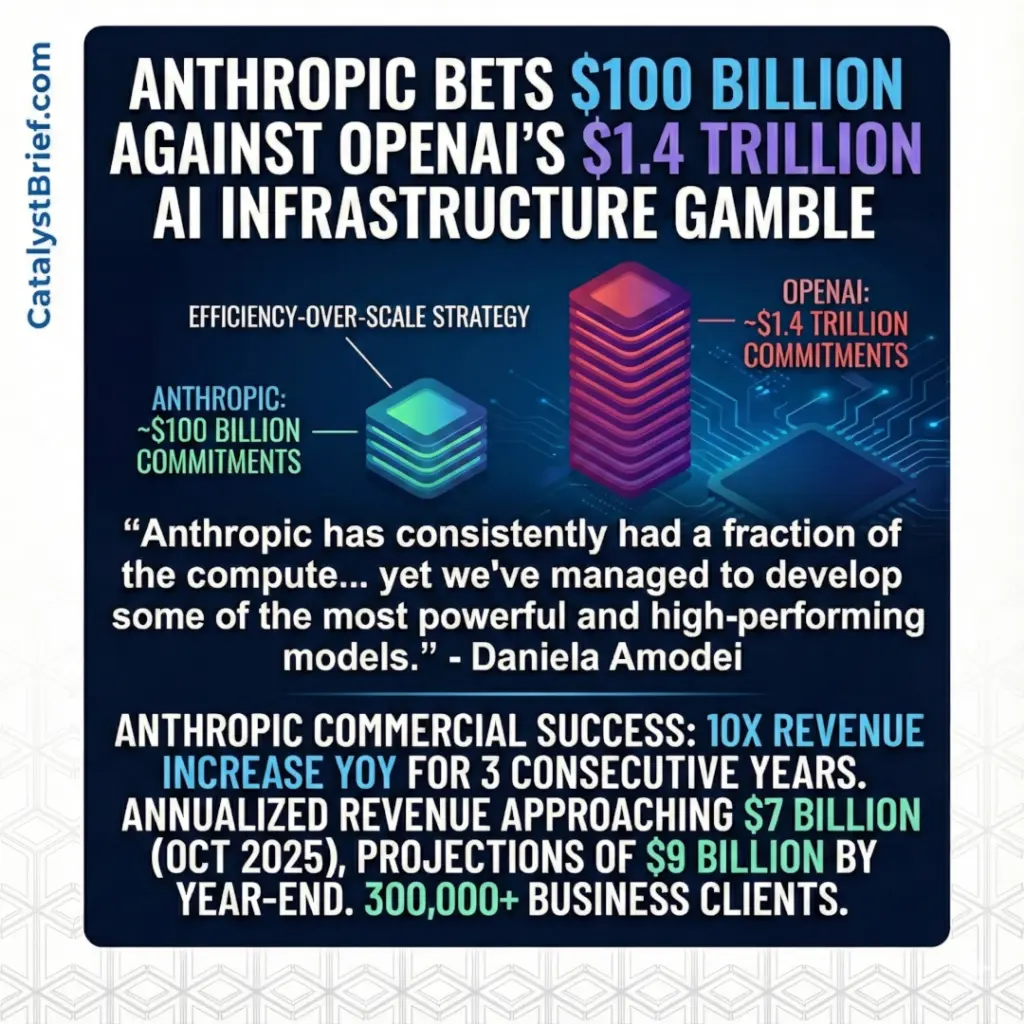

While OpenAI has committed approximately $1.4 trillion to compute over eight years, Anthropic has roughly $100 billion in commitments and focuses instead on algorithmic efficiency and output per dollar spent.

“Anthropic has consistently had a fraction of the compute and capital compared to our rivals, yet we’ve managed to develop some of the most powerful and high-performing models over the past several years,” Daniela Amodei told CNBC. The numbers support her confidence: despite compute commitments roughly one-fourteenth the size of its main competitor’s, Anthropic has achieved explosive commercial growth.
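
The 14x figure is simply the ratio of the two headline commitments cited above. The quick check below uses only the article’s rounded numbers; note that the article states an eight-year horizon only for OpenAI, so this compares headline totals, not annual spending rates.

```python
# Headline compute commitments cited in the article, in USD (rounded figures).
openai_commitment_usd = 1_400_000_000_000   # ~$1.4 trillion over eight years
anthropic_commitment_usd = 100_000_000_000  # ~$100 billion (time horizon not stated)

# How many times larger OpenAI's headline commitment is than Anthropic's.
ratio = openai_commitment_usd / anthropic_commitment_usd

print(f"OpenAI/Anthropic commitment ratio: {ratio:.0f}x")
```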

Efficiency Driving Unprecedented Growth

The company has reported tenfold year-over-year revenue growth for three consecutive years, with annualized revenue approaching $7 billion in October 2025 and projected to reach $9 billion by year-end. Anthropic is targeting $20 billion to $26 billion for 2026, fueled by more than 300,000 business clients.

This trajectory challenges the fundamental assumption driving the AI arms race. Ironically, the Amodei siblings helped pioneer the scaling paradigm their company now questions. Dario Amodei, Anthropic’s CEO, was among the OpenAI researchers who popularized scaling laws: the observation that model performance improves predictably as compute, data, and model size increase.

That pattern has become the financial bedrock justifying sky-high valuations across the AI sector. It explains why cloud providers spend massively on infrastructure, why chip manufacturers command premium stock prices, and why private investors assign enormous valuations to money-losing AI companies.

Enterprise Efficiency Advantage

For enterprises choosing AI providers, Anthropic’s approach addresses critical pain points. Algorithmic efficiency translates into lower API costs per output. Running on multiple hardware platforms (Google TPUs, Amazon Trainium, and Nvidia GPUs) provides vendor flexibility. A focus on reliability and safety aligns with compliance requirements in regulated industries.
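
“Lower API costs per output” is easiest to judge against your own workload. The sketch below is a hypothetical comparison: the provider names, per-token prices, token counts, and success rates are invented placeholders, not published pricing for Anthropic or any other vendor. It divides the average cost per call by the fraction of calls that pass your own quality checks, so a model that is cheaper per call but less reliable can end up costing more per useful result.

```python
from dataclasses import dataclass


@dataclass
class ProviderProfile:
    """Hypothetical pricing and measured usage for one AI provider."""
    name: str
    input_price_per_mtok: float   # USD per million input tokens (placeholder)
    output_price_per_mtok: float  # USD per million output tokens (placeholder)
    input_tokens_per_task: int    # average tokens sent per task on your workload
    output_tokens_per_task: int   # average tokens generated per task
    success_rate: float           # fraction of tasks passing your own checks, in (0, 1]

    def cost_per_task(self) -> float:
        """Average API cost of one call, in USD."""
        return (
            self.input_tokens_per_task * self.input_price_per_mtok
            + self.output_tokens_per_task * self.output_price_per_mtok
        ) / 1_000_000

    def cost_per_successful_task(self) -> float:
        """Expected spend per task that actually passes your checks, in USD."""
        if not 0 < self.success_rate <= 1:
            raise ValueError("success_rate must be in (0, 1]")
        return self.cost_per_task() / self.success_rate


# Invented numbers for illustration only.
provider_a = ProviderProfile("provider_a", 3.0, 15.0, 2_000, 500, 0.90)
provider_b = ProviderProfile("provider_b", 1.0, 5.0, 2_000, 1_500, 0.60)

for p in (provider_a, provider_b):
    print(f"{p.name}: ${p.cost_per_task():.4f} per call, "
          f"${p.cost_per_successful_task():.4f} per successful task")
```

With these made-up numbers, provider_b is cheaper per call but more expensive per successful task. Substituting token counts and pass rates measured in a pilot is what turns this into a meaningful comparison.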

The market has responded. The number of large accounts generating over $100,000 in annual revenue grew nearly sevenfold in the past year, and enterprise clients account for roughly 80 percent of revenue.

The Billion-Dollar Question

“The exponential continues until it doesn’t,” Daniela Amodei noted, highlighting the central uncertainty facing both strategies. If AI capabilities continue scaling predictably with compute investment, OpenAI’s massive commitments could establish an insurmountable lead. But if algorithmic improvements matter more than raw computational power, or if scaling returns begin diminishing, Anthropic’s lean approach could prove prescient.

The stakes extend beyond these two companies. The AI industry faces compute demand growing twice as fast as Moore’s Law, potentially requiring $500 billion in spending annually through 2030. Whether efficiency or scale wins this arms race will determine which companies survive, how much infrastructure gets built, and ultimately whether AI delivers transformative value or becomes this generation’s cautionary tale about excess capital chasing uncertain returns.

For enterprises deploying AI, the practical takeaway is clear: evaluate providers not just on model performance, but on cost efficiency, deployment flexibility, and whether their infrastructure strategy aligns with realistic business economics. The company committing one-fourteenth as much to compute while achieving comparable results might be placing the smarter bet.