Next week’s dueling chip launches signal computing’s most significant architectural shift since the cloud era began.

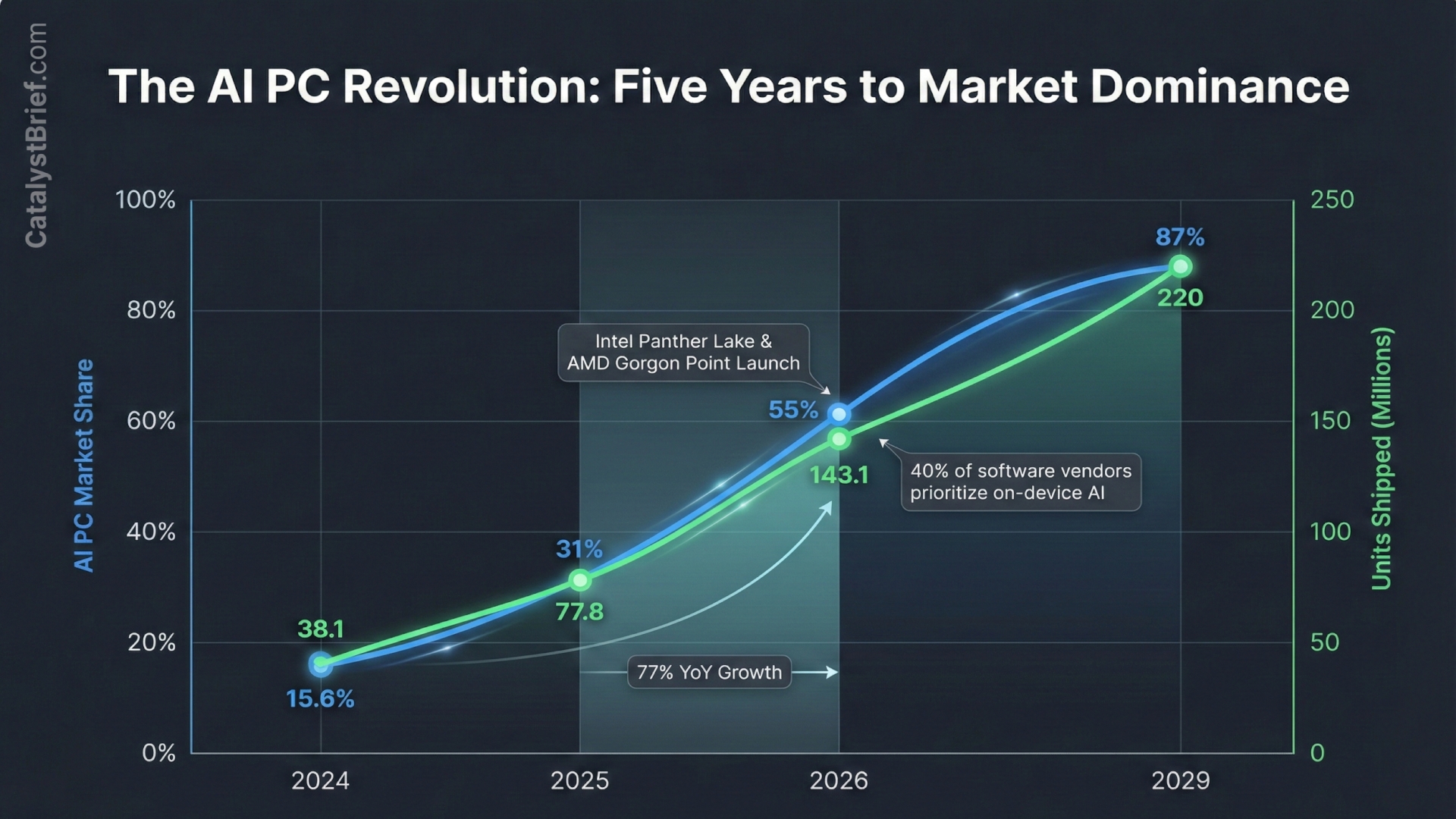

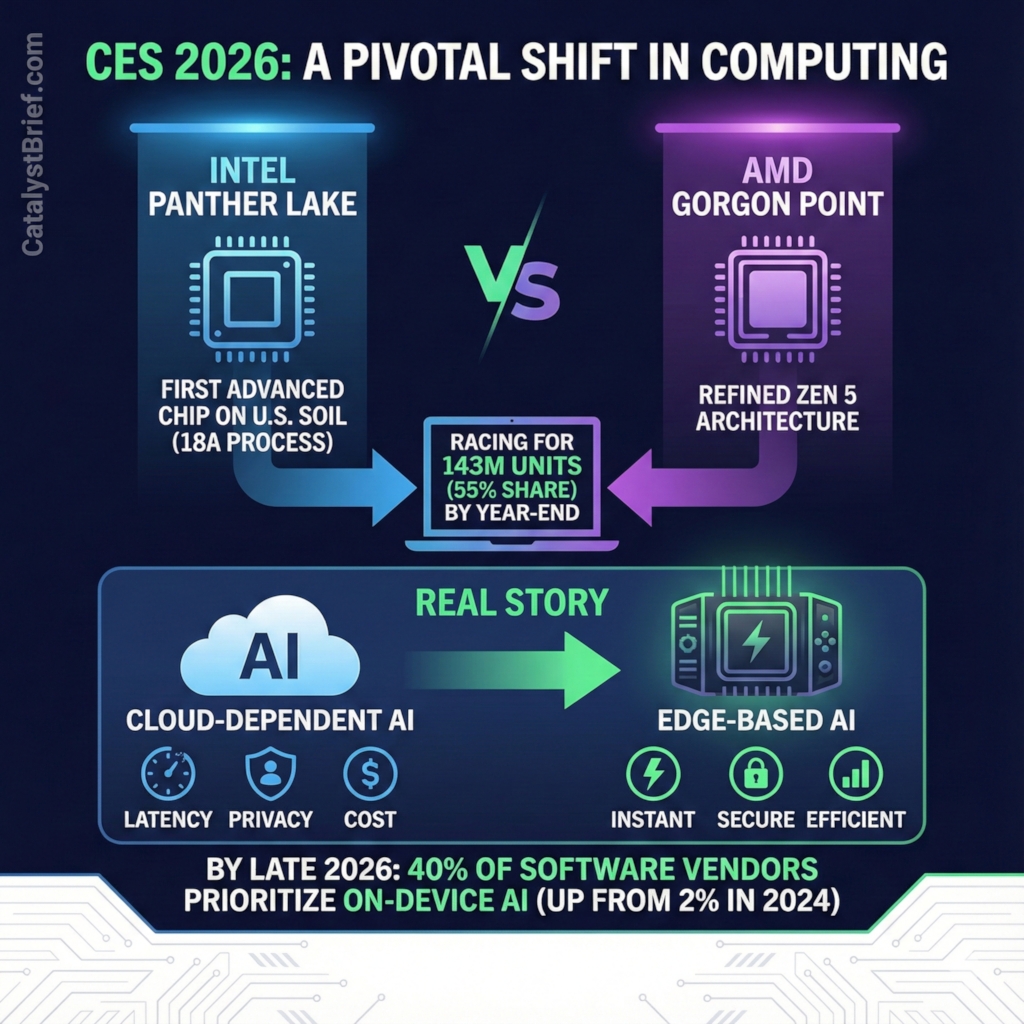

Intel launches its Core Ultra Series 3 processors on January 5 at 3 p.m. Pacific in Las Vegas. AMD CEO Lisa Su delivers her keynote about three hours later, unveiling the Ryzen AI 400 series. Both chipmakers are racing to capture the AI PC segment, which is projected to account for more than half of the global PC market within 12 months.

The competition reflects a fundamental transformation in how artificial intelligence reaches users. For years, AI capabilities lived primarily in cloud data centers, which required constant connectivity and raised concerns about latency, privacy, and cost. These processors flip that model by embedding neural processing units (NPUs) directly into consumer hardware, so AI workloads can run locally without an internet connection.

Intel’s Panther Lake, the codename for the Core Ultra Series 3, represents a watershed moment. The chips are the first consumer products built on Intel’s 18A manufacturing process, the most advanced semiconductor node ever developed and manufactured in the United States. Over 70 percent of production takes place at Intel’s Fab 52 facility in Chandler, Arizona, which reduces supply chain vulnerabilities. Laptops featuring these processors are scheduled to ship in Q1 2026.

AMD’s Gorgon Point, the platform behind the Ryzen AI 400 series, builds on the Strix Point architecture with up to 12 Zen 5 CPU cores, RDNA 3.5 graphics, and an upgraded neural processing unit. The changes are incremental, but they position AMD to keep up competitive pressure across the premium laptop segment.

Gartner projects that AI PCs will account for 55 percent of the global PC market by year-end 2026, totaling 143 million units. More significantly, Gartner expects 40 percent of software vendors to prioritize AI features that run directly on PCs by late 2026, up from just 2 percent in 2024. Applications ranging from video editors to cybersecurity tools will increasingly rely on on-device neural processors rather than cloud infrastructure.

Why This Matters

Edge-based AI computing addresses three critical deployment problems. Cloud-dependent AI introduces latency that can make real-time applications impractical. Privacy concerns arise when sensitive data travels to remote servers. And enterprises face escalating costs as AI workloads consume expensive data center resources. Local processing can address all three at once.

Small language models illustrate the opportunity. These compact models can run entirely on a laptop’s processors, enabling real-time transcription, intelligent photo editing, and contextual writing assistance without a network connection. Organizations can deploy AI while maintaining data sovereignty, which is critical for regulated industries such as healthcare and finance.

The manufacturing dimension carries geopolitical weight. Intel’s domestic 18A production represents the most advanced logic process manufactured at scale within U.S. borders, advancing the goals of the CHIPS Act. As semiconductor supply chains face growing scrutiny, domestic production of cutting-edge processors reduces strategic vulnerabilities.

What To Watch

Performance benchmarks for video editing, 3D rendering, and real-time AI inference will determine which platform delivers better value. Battery life will also be critical, since neural processing units add power draw within already constrained thermal envelopes.

The software ecosystem will decide the market winners. Hardware means little without applications optimized for on-device AI. Microsoft, Adobe, and others have begun announcing support, but broader developer adoption remains uncertain.

Memory shortages add complexity. Strong demand from AI data centers has constrained the supply of memory for consumer devices, which could limit laptop production ramps. Supply constraints could dampen adoption regardless of technical merit.

Next week’s launches mark the beginning of a multi-year transformation. The question is no longer whether AI computing will shift to edge devices, but how quickly it will happen and which companies will capture the value it generates.