Physical AI and world models converge at CES 2026 as robots transition from research labs to factory floors

The Brief

The News: CES 2026 (January 6-9) will showcase the commercialization of Physical AI, with China’s Unitree selling $16,000 USD humanoid robots, Intel launching its first 2-nanometer 18A process chips, and Samsung/LG battling over Micro RGB TV technology. Korean companies captured 60% of CES Innovation Awards, signaling Asia’s dominance in robotics manufacturing.

The Stats: Intel’s Panther Lake promises 50% faster CPU and GPU performance, Samsung’s Micro RGB TVs achieve 100% color gamut coverage across BT.2020, DCI-P3, and Adobe RGB, and Korea deployed 1,012 robots per 10,000 workers – the world’s highest robot density.

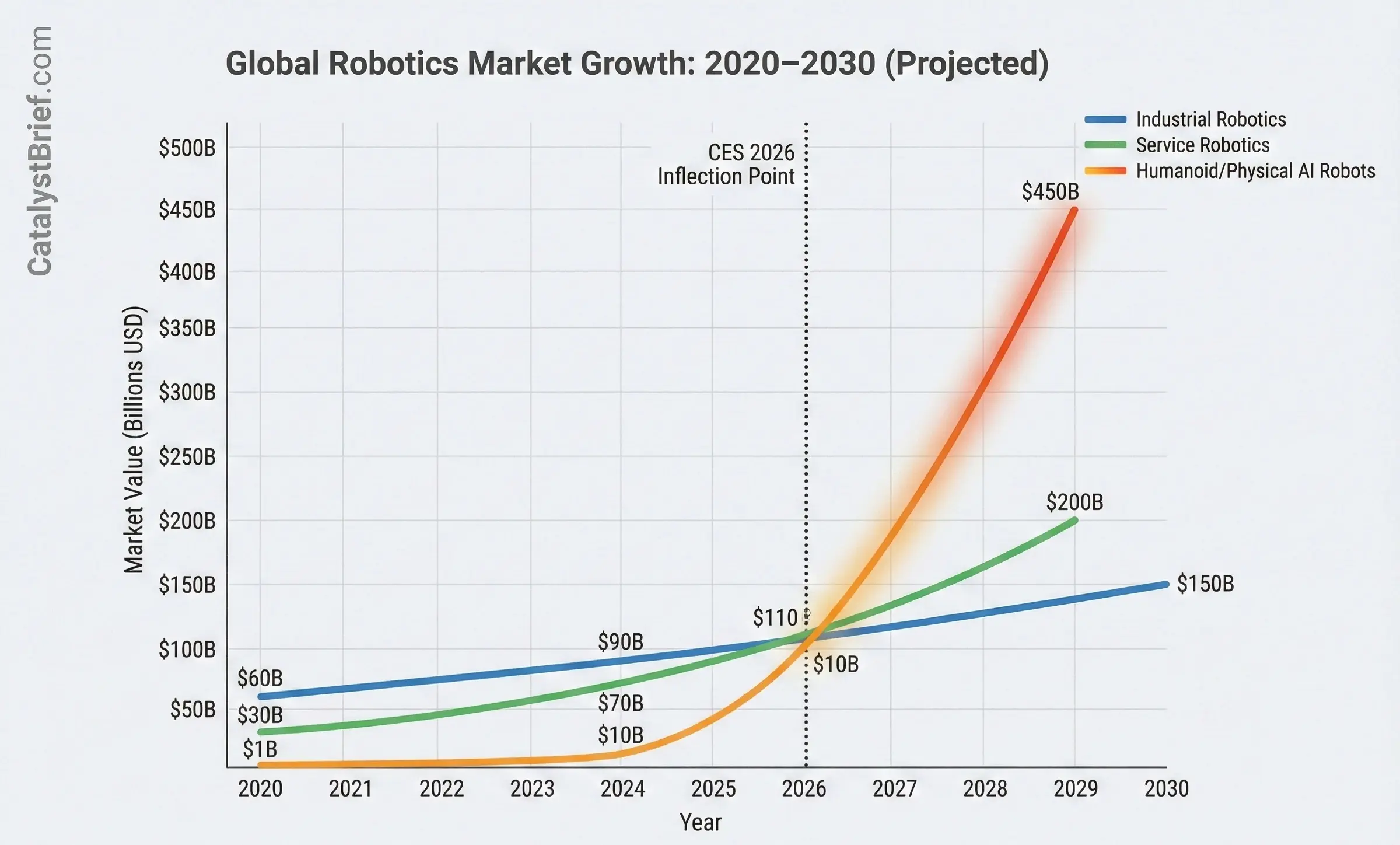

The Bottom Line: The gap between digital AI models and physical-world deployment is closing faster than most predicted. With affordable humanoid robots, production-ready vision-language-action models, and semiconductor breakthroughs enabling on-device AI processing, 2026 marks the inflection point where Physical AI transitions from laboratory curiosity to commercial inevitability.

The Catalyst: When Silicon Meets Reality

In a converted warehouse outside Shenzhen, a humanoid robot threads a needle with sub-millimeter precision, completing an embroidery pattern that would challenge experienced seamstresses. The machine costs less than a year’s salary for the worker it might replace. Welcome to the economics of Physical AI.

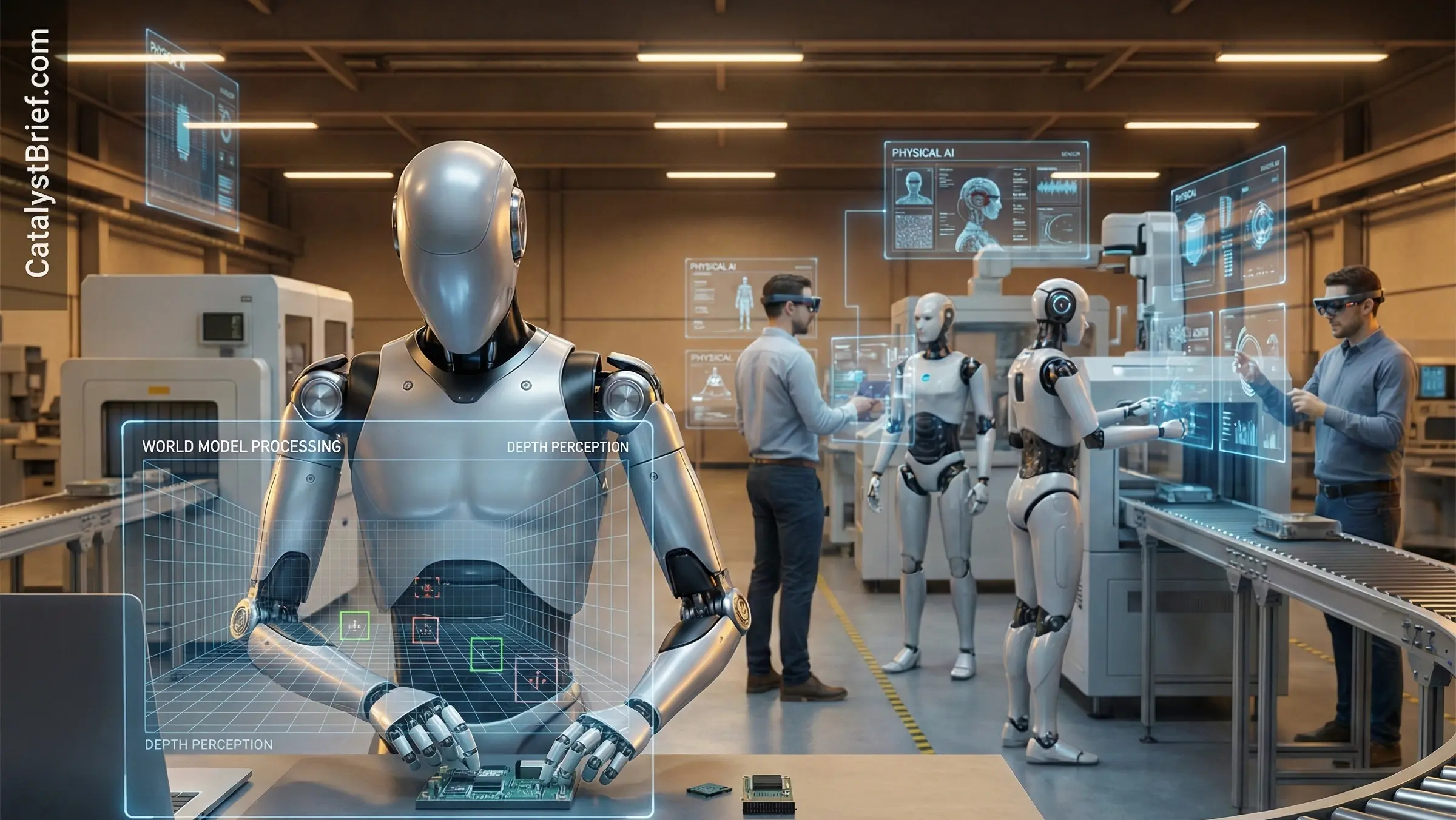

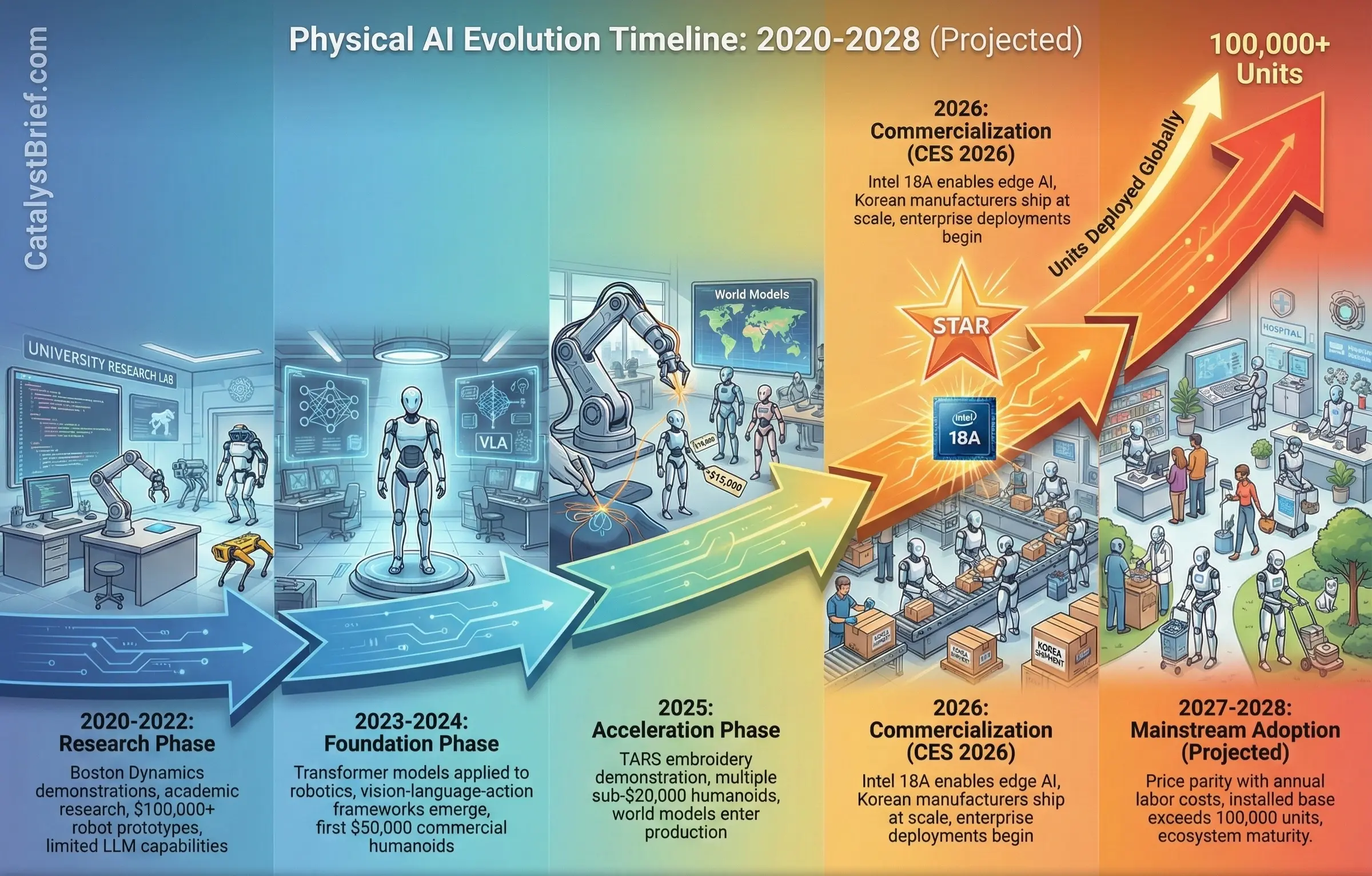

This breakthrough, demonstrated by TARS Robotics in December 2025, captures the essence of what’s converging at CES 2026 in Las Vegas this January. While previous years showcased AI as a software novelty, the 2026 edition represents something fundamentally different: the collision of advanced silicon, vision-language-action models, and commercially viable robotics. The result isn’t just better gadgets. It’s the emergence of machines that can perceive, reason, and act in the physical world at price points that rewrite manufacturing economics.

The Semiconductor Foundation: Intel Bets the Foundry on 18A

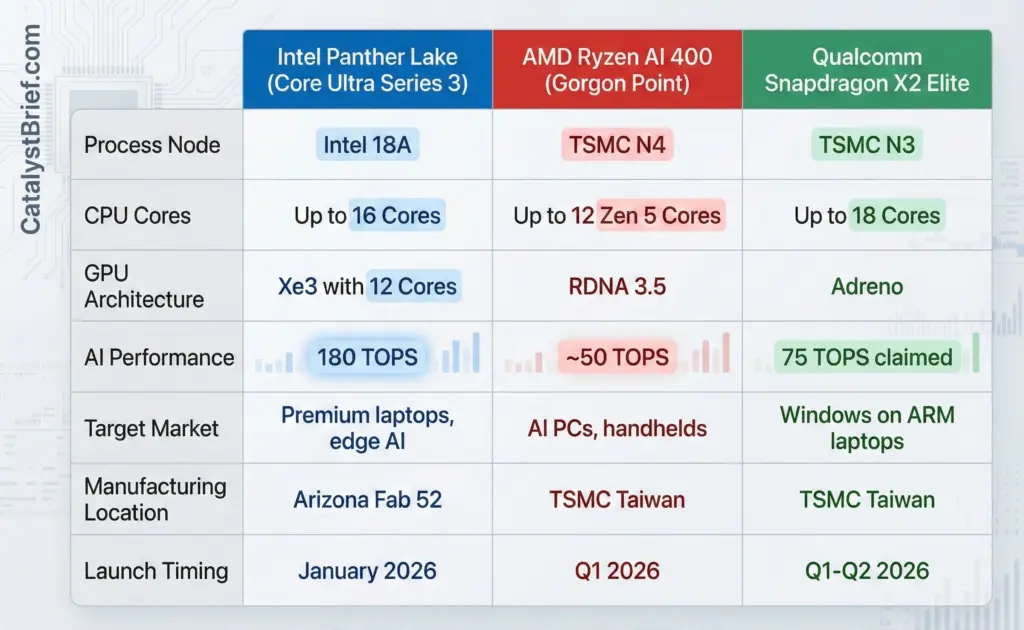

On January 5, Intel will launch its Core Ultra Series 3 “Panther Lake” processors at CES 2026. This isn’t merely another chip refresh. Panther Lake represents Intel’s first consumer processor manufactured on its 2-nanometer 18A process node, described by the company as “the most advanced semiconductor process ever developed and manufactured in the United States.”

The stakes transcend technical achievement. After years of ceding manufacturing leadership to TSMC, Intel CEO Lip-Bu Tan has staked the company’s foundry future on 18A’s success. Production is already underway at Fab 52 in Chandler, Arizona, with the facility representing billions in capital investment and America’s attempt to reclaim semiconductor manufacturing sovereignty.

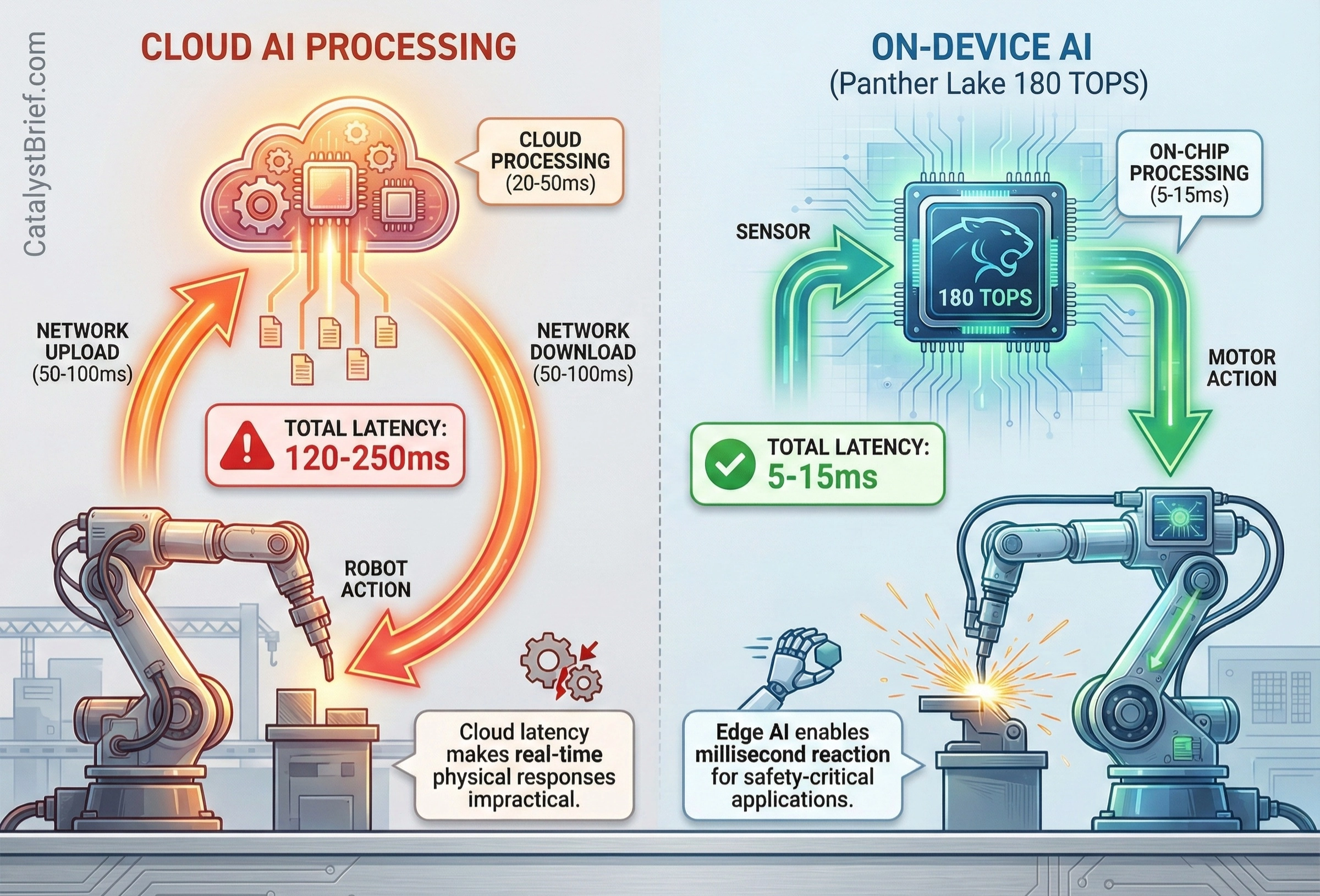

Panther Lake’s architecture reveals Intel’s Physical AI ambitions. The chip combines new Cougar Cove performance cores with Darkmont efficiency cores and features up to 12 Xe3 integrated GPU cores, for a claimed 50% improvement in graphics performance over the previous generation. More critically, Intel rates the platform at up to 180 TOPS of AI performance across the CPU, GPU, and a new 5th-generation Neural Processing Unit combined, with the NPU itself contributing up to 50 TOPS.

Why does this matter for robotics? On-device AI processing eliminates cloud dependency for split-second decisions. A factory robot adjusting to production schedule changes or an autonomous vehicle detecting cyclists requires latency measured in milliseconds, not the hundreds of milliseconds introduced by cloud round-trips. Intel specifically highlighted robotics applications for Panther Lake, unveiling a new Intel Robotics AI software suite designed to enable cost-effective robot development.
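To make the latency argument concrete, the sketch below converts decision latency into distance traveled before a vehicle or robot can react. The 10 ms on-device and 150 ms cloud round-trip figures are illustrative assumptions, not measurements of Panther Lake or any specific cloud service. The 50 km/h speed is likewise just an example.

```python
# Illustrative latency figures only: 10 ms on-device and 150 ms cloud round trip
# are assumptions for this sketch, not measurements of any specific system.
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Meters covered at constant speed while a decision is still pending."""
    speed_m_per_s = speed_kmh / 3.6               # km/h -> m/s
    return speed_m_per_s * (latency_ms / 1000.0)  # ms -> s


if __name__ == "__main__":
    for label, latency_ms in [("on-device", 10), ("cloud round trip", 150)]:
        d = distance_during_latency(50, latency_ms)
        print(f"{label}: {latency_ms} ms at 50 km/h -> {d:.2f} m traveled")
```

At 50 km/h, the assumed on-device path commits to a decision after roughly 0.14 m. The assumed cloud path lets the vehicle cover about 2.08 m first, which is enough distance to matter when a cyclist is involved.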

But Intel isn’t operating in a vacuum. AMD CEO Dr. Lisa Su will deliver the opening keynote at CES 2026 on January 5, outlining AMD’s vision for AI solutions spanning cloud to edge devices. The company’s Ryzen AI 400 series chips codenamed “Gorgon Point” feature up to 12 Zen 5 CPU cores with RDNA 3.5 graphics, targeting AI PC and handheld gaming markets where power efficiency determines viability.

Meanwhile, Qualcomm is expected to show laptops built on its Snapdragon X2 Elite processors at CES, with the X2 Elite Extreme variant claiming up to 75% higher CPU performance than competing solutions at equivalent power levels. The chip wars aren’t about benchmark bragging rights. They’re determining which architectures will power the compute infrastructure of the Physical AI revolution.

World Models: Teaching Machines to Understand Physics

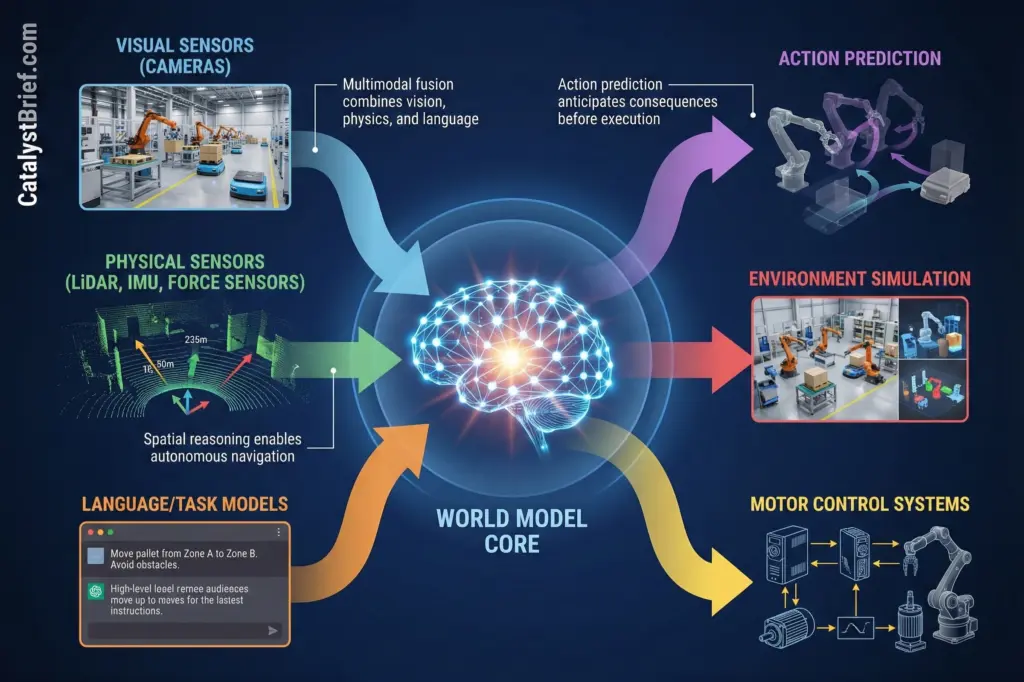

The semiconductor advances enable a crucial AI development that separates 2026 from previous years: world models. Unlike large language models that process text, world models aim to give AI systems a deep understanding of physical space, enabling autonomous operation in unstructured environments.

Arm’s 2026 technology predictions describe world models as “a foundational tool for building and validating physical AI systems – from robotics and autonomous machines to molecular discovery engines.” The concept borrows training methodologies from LLMs while incorporating data describing the physical world.

Consider the distinction. A language model can describe how to navigate a factory floor. A world model enables a robot to actually navigate that floor, understanding that certain areas are off-limits during production shifts, that forklifts have right-of-way in specific corridors, and that the loading dock requires different navigation strategies when wet versus dry.

Vision-language-action models integrate computer vision, natural language processing, and motor control in a single system. Loosely analogous to the human brain, they help robots interpret their surroundings and select appropriate actions. These multimodal systems represent the missing link between digital intelligence and physical capability.

The proof points are multiplying rapidly. TARS Robotics in China demonstrated its DATA AI PHYSICS approach, creating a closed-loop system where real-world operational data trains the TARS AWE 2.0 AI World Engine, which then deploys capabilities directly to physical robots with minimal digital-to-physical gap. The company’s achievement – a humanoid robot performing hand embroidery requiring sub-millimeter precision – represents capabilities previously considered beyond automation’s reach.
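The closed loop described above can be illustrated with a deliberately tiny sketch. Logged real-world transitions train a model of how actions change the world. A planner then searches inside that learned model, and the resulting plan is executed back on the "physical" system. This is not TARS’s method, which the article does not detail. The grid floor, function names, and tabular model are all hypothetical. Production world models use learned neural networks precisely because a lookup table like this cannot generalize to unseen states.

```python
from collections import deque

# Hypothetical 4x4 factory floor: "#" cells are blocked, but the planner is never
# told this directly; it only sees logged (state, action, next_state) outcomes.
FLOOR = [
    "....",
    ".##.",
    "...#",
    "#...",
]
ACTIONS = {"N": (-1, 0), "S": (1, 0), "W": (0, -1), "E": (0, 1)}


def real_step(state, action):
    """Ground-truth 'physics': move one cell unless blocked or off the floor."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    if 0 <= nr < len(FLOOR) and 0 <= nc < len(FLOOR[0]) and FLOOR[nr][nc] == ".":
        return (nr, nc)
    return state  # bumped into an obstacle or wall: stay put


def collect_logs(start):
    """Systematically explore from `start`, recording (state, action, next_state)."""
    logs, seen, frontier = [], {start}, deque([start])
    while frontier:
        s = frontier.popleft()
        for a in ACTIONS:
            nxt = real_step(s, a)
            logs.append((s, a, nxt))
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return logs


def fit_world_model(logs):
    """Tabular 'world model': remember the observed outcome of each (state, action)."""
    return {(s, a): nxt for s, a, nxt in logs}


def plan(model, start, goal):
    """Breadth-first search inside the learned model only (never calls real_step)."""
    parents = {start: None}
    frontier = deque([start])
    while frontier:
        s = frontier.popleft()
        if s == goal:
            path = []
            while parents[s] is not None:
                s, a = parents[s]  # step back to the predecessor state
                path.append(a)
            return path[::-1]
        for a in ACTIONS:
            nxt = model.get((s, a))
            if nxt is not None and nxt not in parents:
                parents[nxt] = (s, a)
                frontier.append(nxt)
    return None  # goal not reachable according to the model


if __name__ == "__main__":
    model = fit_world_model(collect_logs(start=(0, 0)))
    actions = plan(model, start=(0, 0), goal=(3, 3))
    state = (0, 0)
    for a in actions:  # "deploy" the plan back to the real environment
        state = real_step(state, a)
    print(actions, "->", state)
```

In this sketch, the gap between planning and reality is zero only because the toy "physics" is deterministic and fully logged. Real deployments face noisy sensors and unlogged situations, which is the digital-to-physical gap the article describes.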

Analog Devices’ AI predictions for 2026 highlight hybrid world models integrating mathematical and physical reasoning with data-driven sensor-fused dynamics. “Think of a mobile factory robot that can reason for itself and determine what to do when faced with an unexpected obstacle,” explains Ryan Golding, VP of AI at Analog Devices. “These systems will not only describe their environments but actively engage with them, learning from their own experience.”

The shift from description to action marks Physical AI’s fundamental transition. Previous robotics relied on explicit programming for every scenario. World models enable generalization – robots that can transfer learned skills across different environments and adapt to novel situations without human reprogramming.

The $16,000 Inflection Point: When Humanoids Become Economical

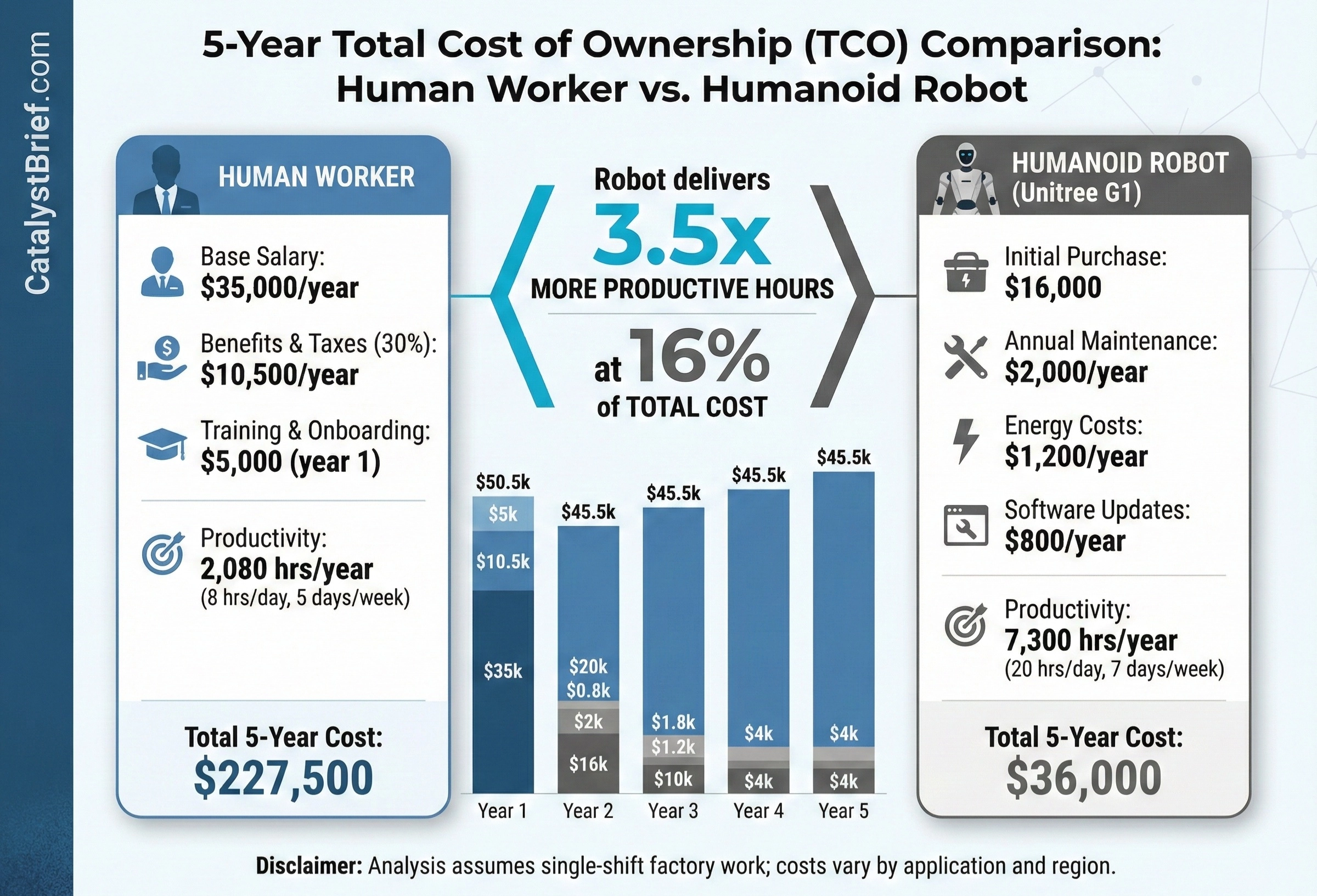

China’s Unitree Robotics will showcase its G1 humanoid robot at CES 2026, priced at $16,000 USD. At first glance, this appears incremental – another robot demonstration at a tech conference. The economic implications deserve closer examination.

A factory worker earning $35,000 USD annually represents roughly $50,000 USD in total compensation when including benefits, training, and overhead. The $16,000 USD robot works 24 hours daily without fatigue, doesn’t require benefits, and can be reprogrammed for different tasks as production needs shift. The return on investment calculation becomes straightforward for manufacturers.
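The payback arithmetic can be sketched as follows, using the article’s $16,000 USD robot price and $50,000 USD loaded labor cost. The `shifts_covered` and `annual_robot_opex` parameters are hypothetical knobs added for this sketch. They stand in for how much work the robot actually replaces and what maintenance, charging, and support might cost. The sketch ignores integration, depreciation, and downtime.

```python
import math


def payback_months(
    robot_price: float,
    annual_labor_cost: float,
    shifts_covered: float = 1.0,
    annual_robot_opex: float = 0.0,
) -> float:
    """Months until cumulative net savings equal the robot's purchase price.

    shifts_covered: worker-equivalents the robot replaces (hypothetical; 1.0 means
    it matches one full-time worker, 0.5 means it only does half the work).
    annual_robot_opex: hypothetical yearly cost of maintenance, charging, support.
    """
    annual_savings = annual_labor_cost * shifts_covered - annual_robot_opex
    if annual_savings <= 0:
        return math.inf  # never pays back under these inputs
    return robot_price / annual_savings * 12


if __name__ == "__main__":
    scenarios = {
        "article's framing": {},
        "half a worker's output": {"shifts_covered": 0.5},
        "plus $10k/yr upkeep": {"annual_robot_opex": 10_000},
    }
    for name, kwargs in scenarios.items():
        print(f"{name}: {payback_months(16_000, 50_000, **kwargs):.1f} months")
```

Under the article’s framing, payback takes about 3.8 months. If the robot delivers only half a worker’s output, that stretches to about 7.7 months. The sensitivity to `shifts_covered` is why the next paragraph’s point about matching human capability matters as much as price.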

But price alone doesn’t create viability. The robot must match or exceed human capability for specific tasks. This is where Physical AI’s recent breakthroughs matter. UK startup Humanoid will demonstrate its HMND 01 Alpha industrial robot, developed in seven months, standing 220 cm tall, moving at 7.2 km/h, and carrying 15 kg payloads. Japan’s MinebeaMitsumi will present humanoid solutions featuring high-torque micro actuators co-developed with harmonic drive systems.

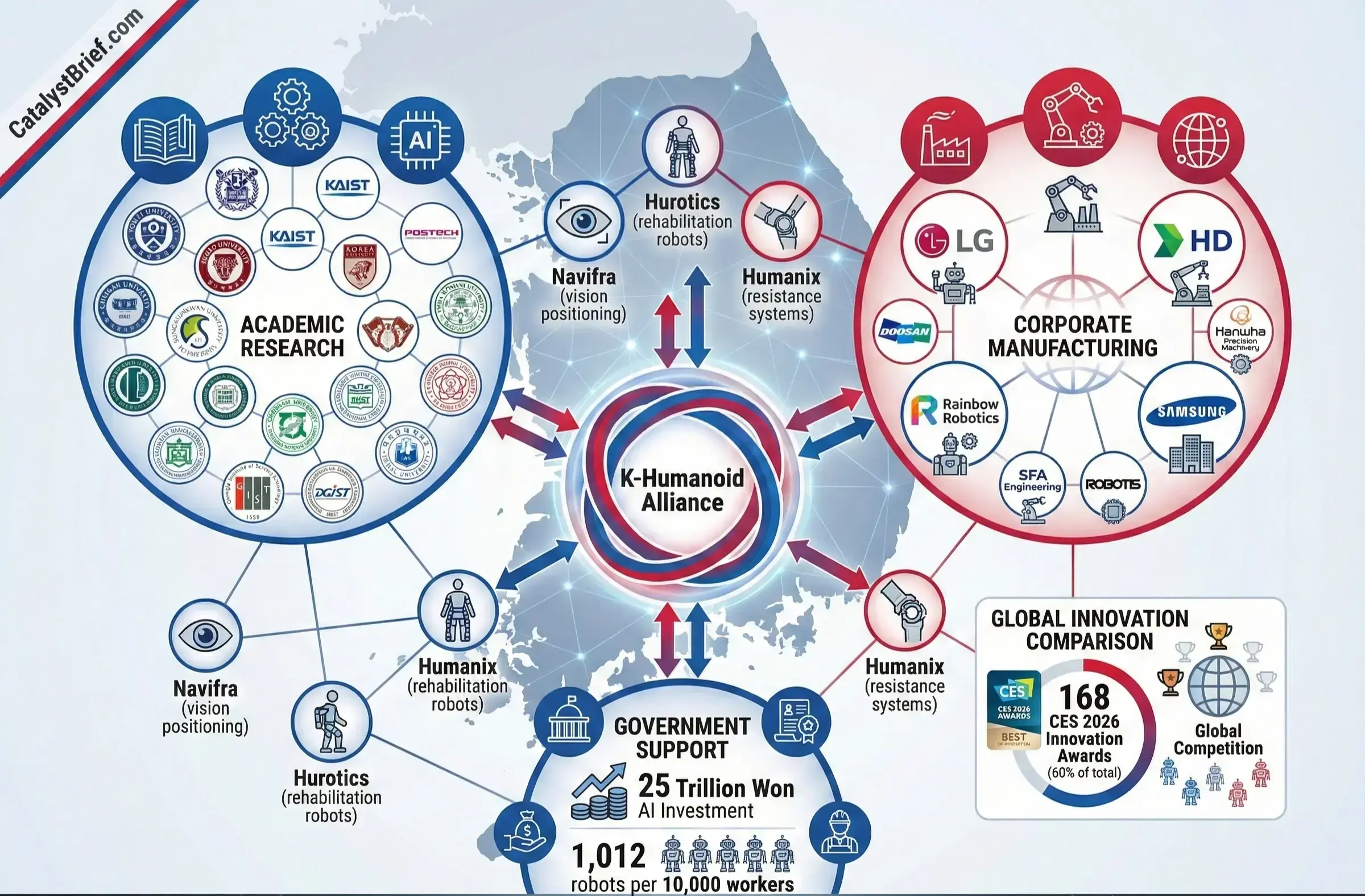

The Korean robotics ecosystem provides the clearest signal of where this market is heading. Korean companies captured 168 of 284 CES 2026 Innovation Awards – nearly 60% of total honors, up from 131 awards in 2025. These aren’t vanity metrics. They reflect Korea’s systematic approach to robotics commercialization, backed by government investment of 25 trillion won in AI and the world’s highest robot density at 1,012 robots per 10,000 workers.

The K-Humanoid Alliance – a consortium of 20 major Korean universities and 224 member organizations including Rainbow Robotics, LG Electronics, and HD Hyundai Robotics – will operate a dedicated Robot Pavilion at CES 2026. This coordinated approach combining academic research, corporate manufacturing, and government support creates the ecosystem velocity that individual companies struggle to achieve alone.

Award-winning Korean startups reveal the diversity of practical applications. Navifra received recognition for vision-based precision positioning achieving millimeter-level accuracy without LiDAR or markers, simplifying autonomous mobility deployment. Hurotics and Humanix showcased rehabilitation robots using Empathy AI to improve life quality – Hurotics’ wearable gait-assist robot analyzes user movement for recovery, while Humanix’s smart resistance arm calibrates feedback based on user strength.

These aren’t science fiction demonstrations. They’re commercial products addressing specific market needs with clear value propositions. The pattern repeating across robotics categories suggests we’ve crossed from proof-of-concept to deployment phase.

The Display Technology Subplot: Samsung and LG’s Micro RGB Battle

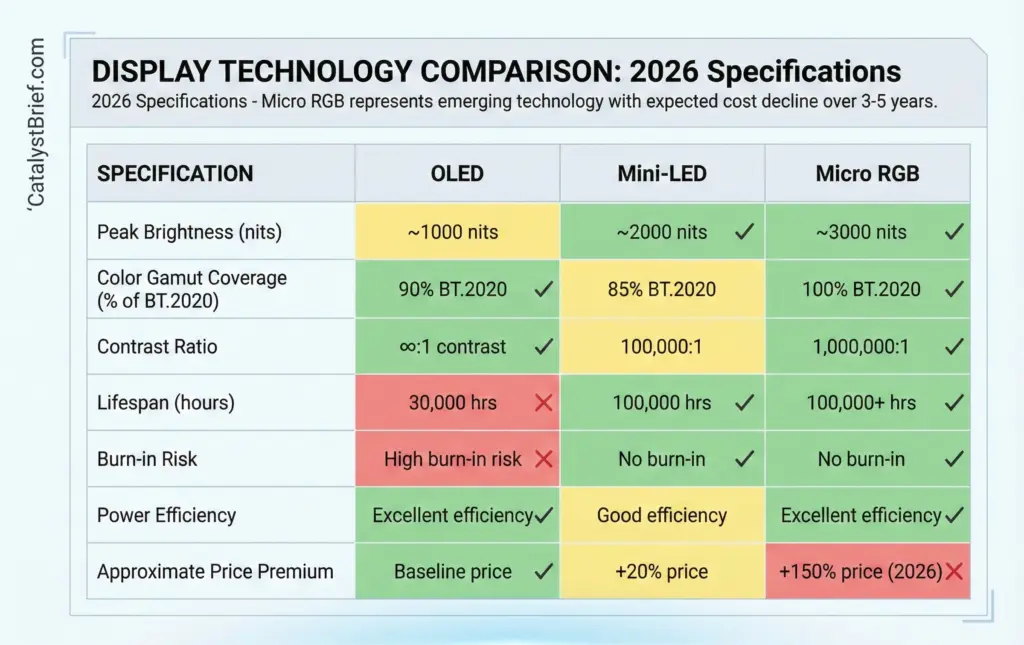

While robotics and semiconductors dominate the Physical AI narrative, a parallel technology war will unfold at CES 2026 that reveals how premium display markets evolve when manufacturing constraints ease. Samsung announced an expanded Micro RGB TV lineup spanning 55-, 65-, 75-, 85-, 100-, and 115-inch models, up from a single 115-inch model in 2025 that cost $30,000 USD.

LG countered with its first Micro RGB evo TV announcement, promising the LG MRGB95 in 75-, 86-, and 100-inch sizes. Both companies are racing to claim leadership in RGB LED technology, where individual red, green, and blue LEDs replace traditional white LED backlights.

The technical achievement matters less than the competitive dynamics it reveals. Samsung and LG, longtime OLED rivals, are simultaneously pivoting to Micro RGB with 100% color gamut coverage across BT.2020, DCI-P3, and Adobe RGB standards. This coordinated shift suggests OLED’s limitations – particularly burn-in concerns and brightness constraints – are driving premium manufacturers toward alternatives.

Sony’s involvement adds weight to the trend. The company trademarked “True RGB” in Japan and Canada, signaling expected entry into RGB-based displays as part of its 2026 Bravia lineup. When three major manufacturers converge on identical technology paths within months, it indicates supply chain readiness and market timing alignment rather than independent innovation.

The broader lesson extends beyond television specifications. Manufacturing technology that debuts at $30,000 USD for a 115-inch screen scales down to 55 inches within 12 months, suggesting manufacturing yields have improved enough to make smaller, cheaper panels viable. This pattern – premium technology becoming accessible mainstream products within a year – will repeat across robotics and AI hardware categories.

Hyundai’s Physical AI Integration: From Concept to Manufacturing Reality

Hyundai Motor Group will unveil its comprehensive AI Robotics Strategy at CES 2026 under the theme “Partnering Human Progress.” The presentation on January 5 will spotlight expansion of AI robotics technology within Hyundai’s Group Value Network, outlining commercialization strategies through human-robot collaboration.

The most anticipated element: Boston Dynamics will publicly debut its new Atlas robot at CES. Since Hyundai’s acquisition of Boston Dynamics, the automotive conglomerate has been integrating the robotics company’s capabilities into manufacturing operations. The new Atlas represents this evolution – moving from research platform to production tool.

Hyundai’s approach differs from standalone robotics companies. The automaker is deploying what it calls Software-Defined Factory methodology, using robots not as isolated automation cells but as networked intelligence within broader manufacturing systems. This mirrors the automotive industry’s shift from mechanical systems to software-defined vehicles, applying the same architectural thinking to production facilities.

The Group’s exhibition from January 6-9 will showcase interactive scenarios illustrating AI Robotics’ practical impact in work environments, including recreations of research environments and hourly demonstrations of Atlas, Spot, and MobED robots. For executives evaluating Physical AI deployment, Hyundai’s integration provides the blueprint – how to move from pilot programs to enterprise-scale implementation.

The Inflection Point That Everyone Missed

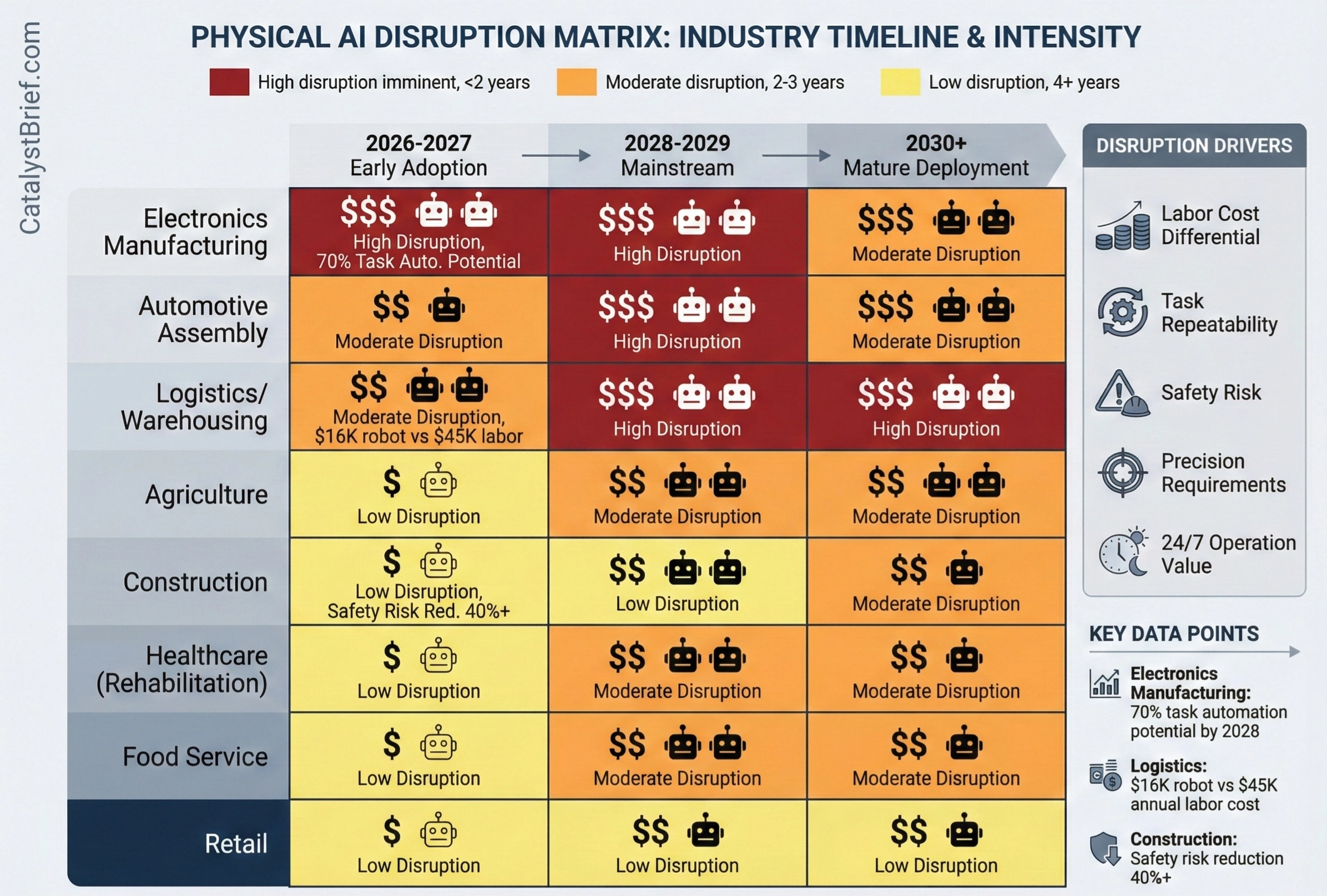

Here’s what most coverage of CES 2026 will overlook: the convergence of affordable humanoid robots, production-ready world models, and efficient edge AI processing doesn’t just automate existing processes. It enables entirely new manufacturing strategies that were economically impossible when human labor provided the flexibility.

Consider microfactories – small-scale production facilities capable of rapid reconfiguration for different products. With human workers, these require extensive training periods when switching product lines. With AI-powered robots utilizing world models, the same physical infrastructure can shift from assembling consumer electronics to medical devices to automotive components by downloading new task models and adjusting work cell layouts.

The implications ripple through supply chains. When production location decisions aren’t constrained by labor availability, manufacturers can position facilities based on proximity to customers, reducing shipping times and inventory carrying costs. This reverses decades of offshoring driven by labor cost differentials.

The semiconductor advances enabling this transformation also democratize AI development. When 180 TOPS of AI performance runs on laptop-class processors, startups can develop and test Physical AI applications without requiring data center infrastructure. This lowers barriers to entry for robotics companies and accelerates the pace of application development.
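A rough sketch of what a 180 TOPS rating buys a developer follows. Note that 180 TOPS is Intel’s combined CPU, GPU, and NPU peak figure. Peak ratings like this are typically quoted for low-precision arithmetic and are rarely sustained. The 30% utilization and the 1e12 operations per inference below are hypothetical placeholders, not properties of any real vision-language-action model.

```python
def max_inferences_per_second(
    peak_tops: float, ops_per_inference: float, utilization: float = 0.3
) -> float:
    """Rough upper bound on model calls per second from a peak TOPS rating.

    utilization is a hypothetical fraction of peak throughput actually sustained;
    real workloads rarely hit the spec-sheet number.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    sustained_ops_per_s = peak_tops * 1e12 * utilization
    return sustained_ops_per_s / ops_per_inference


if __name__ == "__main__":
    # Hypothetical model needing 1e12 operations per forward pass.
    rate = max_inferences_per_second(peak_tops=180, ops_per_inference=1e12)
    print(f"~{rate:.0f} inferences/s, or ~{1000 / rate:.1f} ms per inference")
```

Under these assumptions, the laptop-class chip could run about 54 inferences per second, roughly 18.5 ms each. That would be fast enough to prototype a perception-and-control loop locally, though real numbers depend heavily on the model and software stack.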

Investment and Career Implications

For investors evaluating the Physical AI landscape, CES 2026 provides critical data points on commercial readiness. The gap between demonstration and deployment has compressed dramatically. Companies such as Unitree shipping humanoid robots at $16,000 USD price points change the investment thesis from “will this technology work?” to “how quickly can it scale?”

The semiconductor battle between Intel, AMD, and Qualcomm determines platform winners, but the actual value capture may occur several layers up the stack. Companies developing world model training frameworks, simulation environments, and vision-language-action models represent the picks-and-shovels opportunity in the Physical AI gold rush.

For engineers and technical professionals, the skill sets commanding premium compensation are shifting rapidly. Robotics expertise increasingly requires an understanding of transformer architectures, reinforcement learning, and multimodal AI systems. Mechanical engineering knowledge alone won’t suffice when robots’ capabilities are primarily determined by software.

Manufacturing engineers face particularly significant transitions. Factories optimized for human workers make different design tradeoffs than facilities designed for human-robot collaboration. Understanding how to integrate intelligent machines into production flows while maintaining quality control and safety standards becomes a distinct discipline.

The talent shortage in Physical AI development creates opportunity for those willing to skill up quickly. Universities haven’t yet adapted curricula to this convergence of robotics, AI, and advanced manufacturing. Self-directed learning and practical experience with emerging platforms will determine who captures the premium in this transitional period.

What Comes After CES

CES 2026 won’t resolve all questions about Physical AI’s trajectory. Critical challenges remain around safety standards, liability frameworks, and workforce transition support. But it will clarify which companies are shipping products versus showcasing prototypes, which technologies have overcome manufacturing constraints, and which use cases justify immediate investment.

The event’s real value emerges in the months following, when announced products actually ship, when promised specifications meet validation testing, and when early deployments reveal integration challenges that polished demonstrations concealed. The pattern from previous technology waves suggests winners emerge not from the flashiest launches but from the quietest successful deployments.

For executives planning 2026 technology investments, CES provides the opportunity to move from observation to engagement. The companies demonstrating functional robotics and AI systems at the show will be seeking pilot partners for commercial deployments. The semiconductor manufacturers launching new platforms need early adopters willing to develop applications showcasing their capabilities.

The question isn’t whether Physical AI will transform manufacturing, logistics, and service industries. The demonstrations at CES 2026 confirm that transformation is already underway. The relevant question is whether your organization positions itself as an early adopter capturing competitive advantage or a late follower implementing commoditized solutions.

The robot threading the needle in Shenzhen represents more than a technical achievement. It signals the moment when artificial intelligence stopped being a software abstraction and became a physical reality reshaping economic fundamentals. CES 2026 is where the tech industry confronts that transition and begins figuring out what comes next.

The Catalyst’s Take

I predict that within 18 months, at least three Fortune 500 manufacturers will announce factory floor deployments of humanoid robots at scales exceeding 100 units per facility. The economics are already compelling – $16,000 USD robots working 24/7 deliver ROI that no CFO can ignore.

The real disruption won’t come from established robotics companies but from the convergence of semiconductor advances and world model AI. Panther Lake, built on Intel’s 18A process and rated at up to 180 TOPS of on-device AI performance, transforms every laptop into a potential robotics development platform. This democratization will unleash applications we haven’t imagined.

The companies that will win aren’t those with the best robots – they’re the ones building platforms enabling thousands of developers to create specialized Physical AI applications. Watch for robotics app stores and world model marketplaces. That’s where value capture happens.

CES 2027 will mark the last year anyone questions whether Physical AI is “ready.” The only question then: who captured market share during 2026, when adoption was still voluntary rather than a competitive necessity.